Sound modulation in V1 depends on the behavioral relevance of the visual cue

McClure J.P., Erkat O.B., Corbo J., and Polack P-O. (2022) Estimating how Sounds Modulate Orientation Representation in the Primary Visual Cortex using Shallow Neural Networks. Frontiers in Systems Neuroscience. 16(869705):1-15.

Audiovisual perception results from the interaction between visual and auditory processing. Hence, presenting auditory and visual inputs simultaneously usually improves the accuracy of the unimodal percepts, but can also lead to audiovisual illusions. Crosstalk between visual and auditory inputs during sensory processing was recently shown to occur as early as the primary visual cortex (V1). In a previous study, we demonstrated that sounds improve the representation of the orientation of visual stimuli in the naïve mouse V1 by promoting the recruitment of neurons better tuned to the orientation and direction of the visual stimulus. However, we did not test whether this type of modulation was still present when the auditory and visual stimuli were both behaviorally relevant. To determine the effect of sounds on active visual processing, we performed calcium imaging in V1 while mice were performing an audiovisual task. We then compared the representations of the task stimuli orientations in the unimodal visual and audiovisual contexts using shallow neural networks (SNNs). SNNs were chosen because of the biological plausibility of their computational structure and the possibility of identifying post hoc the biological neurons having the strongest influence on the classification decision. We first showed that SNNs can categorize the activity of V1 neurons evoked by drifting gratings of 12 different orientations. Then, we demonstrated using the connection weight approach that SNN training assigns the largest computational weight to the V1 neurons having the best orientation and direction selectivity. Finally, we showed that it is possible to use SNNs to determine how V1 neurons represent the orientations of stimuli that do not belong to the set of orientations used for SNN training. Once the SNN approach was established, we replicated the previous finding that sounds improve orientation representation in the V1 of naïve mice. Then, we showed that, in mice performing an audiovisual detection task, task tones improve the representation of the visual cues associated with the reward while deteriorating the representation of non-rewarded cues. Altogether, our results suggest that the direction of sound modulation in V1 depends on the behavioral relevance of the visual cue.
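The SNN classification and the connection weight approach described in the abstract can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration and is not taken from the study: the synthetic tuning curves (`pref`, `kappa`), the 30° spacing of the 12 classes, the hidden layer size, the tanh and softmax units, and the training settings. The connection weight of an input neuron for an output class is taken, as the approach is commonly described, to be the sum over hidden units of the product of its input-to-hidden and hidden-to-output weights.

```python
import numpy as np

rng = np.random.default_rng(0)
n_neurons, n_hidden, n_classes, n_trials = 40, 10, 12, 50
directions = np.arange(n_classes) * 30.0  # assumption: 12 classes, 30 deg apart

# Synthetic "V1" population (illustrative only, not recorded data).
pref = rng.uniform(0.0, 360.0, n_neurons)   # preferred direction of each neuron
kappa = rng.uniform(0.0, 3.0, n_neurons)    # tuning sharpness, 0 = untuned

def population_response(stim_deg, n):
    """Noisy single-trial responses of all neurons to one grating direction."""
    delta = np.deg2rad(stim_deg - pref)
    tuning = np.exp(kappa * (np.cos(delta) - 1.0))
    return tuning + 0.1 * rng.standard_normal((n, n_neurons))

X = np.vstack([population_response(d, n_trials) for d in directions])
y = np.repeat(np.arange(n_classes), n_trials)
Y = np.eye(n_classes)[y]                    # one-hot targets

# One hidden layer: input neurons -> tanh hidden units -> softmax classes.
W1 = 0.1 * rng.standard_normal((n_neurons, n_hidden))
b1 = np.zeros(n_hidden)
W2 = 0.1 * rng.standard_normal((n_hidden, n_classes))
b2 = np.zeros(n_classes)

def forward(inputs):
    hidden = np.tanh(inputs @ W1 + b1)
    logits = hidden @ W2 + b2
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(logits)
    probs /= probs.sum(axis=1, keepdims=True)
    return hidden, probs

# Full-batch gradient descent on the cross-entropy loss.
learning_rate = 0.5
for _ in range(2000):
    hidden, probs = forward(X)
    grad_logits = (probs - Y) / len(X)
    grad_hidden = (grad_logits @ W2.T) * (1.0 - hidden ** 2)
    W2 -= learning_rate * hidden.T @ grad_logits
    b2 -= learning_rate * grad_logits.sum(axis=0)
    W1 -= learning_rate * X.T @ grad_hidden
    b1 -= learning_rate * grad_hidden.sum(axis=0)

# Training-set accuracy only; a real analysis would use held-out trials.
accuracy = (forward(X)[1].argmax(axis=1) == y).mean()

# Connection weights: sum over hidden units of the input-to-hidden weight
# times the hidden-to-output weight, for each (neuron, class) pair.
connection_weights = W1 @ W2                        # (n_neurons, n_classes)
importance = np.abs(connection_weights).sum(axis=1)  # one value per neuron
top_neurons = np.argsort(importance)[::-1][:5]

print(f"training accuracy: {accuracy:.2f}")
print("neurons with the largest connection weights:", top_neurons)
print("their tuning sharpness (kappa):", np.round(kappa[top_neurons], 2))
```

Ranking the input neurons by `importance` is one simple way to ask which neurons the trained network relies on most. Summing absolute connection weights across classes is a choice made for this sketch, and other summaries are possible.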

Figure

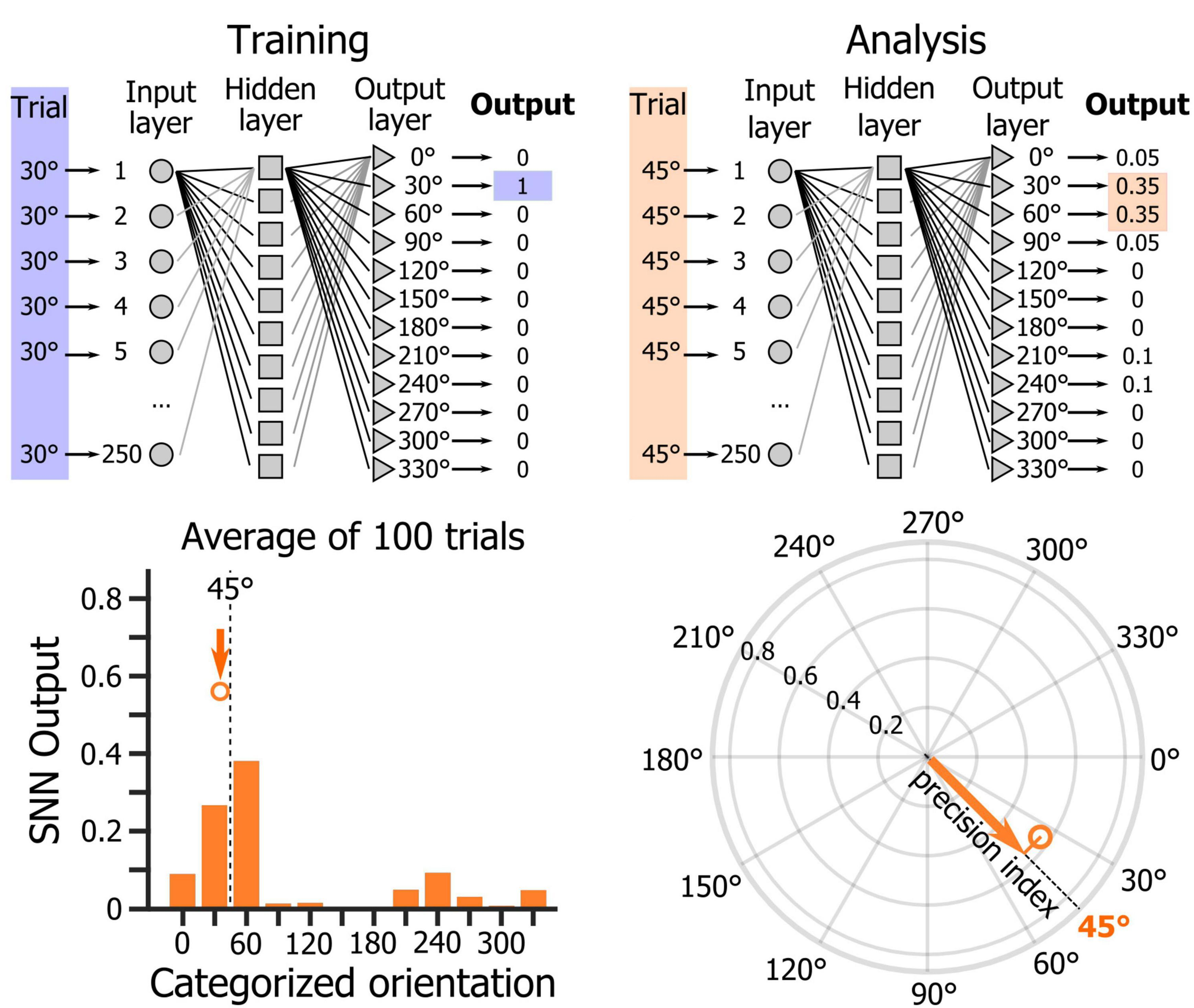

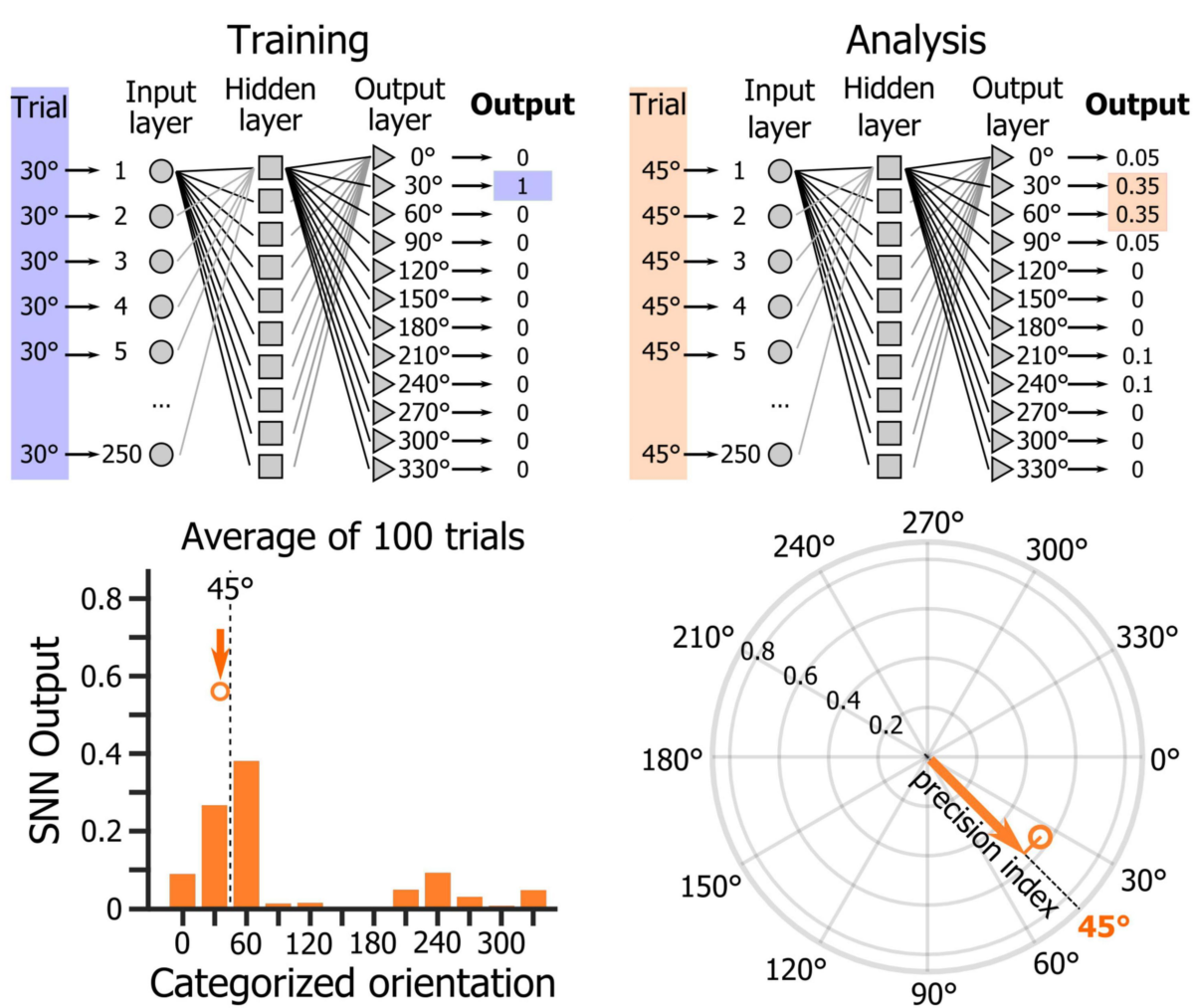

Top left panel. Schematic representation of the shallow neural network (SNN) training. Top right panel. Schematic representation of SNN testing with a 45° stimulus that does not belong to the training categories. Bottom left panel. Output of a randomly selected trained SNN, averaged across 100 trials of the presentation of a 45° drifting grating. The dot indicates the orientation and length of the circular mean vector computed from the mean distribution of the SNN output. Bottom right panel. Precision index, defined as the length of the vector obtained by projecting the circular mean vector onto the axis of the visual stimulus orientation.
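The circular mean vector and the precision index described in the caption can be sketched as follows. This is a minimal illustration under stated assumptions: the 12 output classes are taken to be evenly spaced over 360°, the SNN output is treated as a set of non-negative weights that are normalized to sum to 1, and the function names and the example output vector are hypothetical.

```python
import numpy as np

def circular_mean_vector(output, angles_deg):
    """Length and angle (in degrees) of the circular mean of `output`.

    `output` holds one non-negative value per class and `angles_deg` holds
    the angle assigned to each class.
    """
    weights = np.asarray(output, dtype=float)
    weights = weights / weights.sum()
    theta = np.deg2rad(np.asarray(angles_deg, dtype=float))
    resultant = np.sum(weights * np.exp(1j * theta))
    return np.abs(resultant), np.rad2deg(np.angle(resultant)) % 360.0

def precision_index(output, angles_deg, stim_deg):
    """Projection of the circular mean vector onto the stimulus axis."""
    length, angle = circular_mean_vector(output, angles_deg)
    return length * np.cos(np.deg2rad(angle - stim_deg))

# Assumption: 12 classes evenly spaced over 360 degrees.
angles = np.arange(0, 360, 30)

# Hypothetical averaged SNN output for a 45 degree stimulus: the output is
# split equally between the two neighboring trained classes, 30 and 60 degrees.
output = np.zeros(12)
output[1] = 0.5
output[2] = 0.5

length, angle = circular_mean_vector(output, angles)
index = precision_index(output, angles, stim_deg=45.0)
print(f"mean vector: length {length:.3f}, angle {angle:.1f} deg")
print(f"precision index: {index:.3f}")
```

In this toy case the mean vector points exactly at 45°, so the precision index equals the vector length, cos(15°) ≈ 0.966. An output concentrated on classes far from the stimulus would give a smaller, possibly negative, index.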